Getting started with Terraform and Infrastructure as Code

I recently worked with Terraform to codify IT infrastructure, i.e. server deployments, network configurations and other resources. Based on my working notes, I want to give an introduction on how to write infrastructure resource definitions and execute them using Terraform.

I’ll be using AWS as the cloud provider in my examples, but many more providers are available. In fact, one of the advantages of using a platform-agnostic tool is that you can manage all your infrastructure in one place instead of individually for every provider or on-premises platform you use.

As this is meant to be a relatively high-level overview, we’re only going to create an EC2 instance and won’t involve other services or additional configuration management tools yet.

Prerequisites

If you want to follow along, you’ll need…

- an AWS account

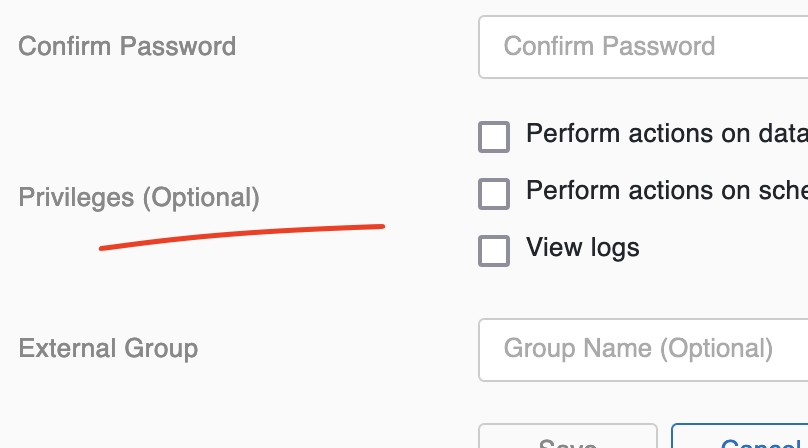

- an IAM user you’d like to use

- a default VPC for the AWS region you choose to use

- the terraform CLI tool installed (using a package manager, a pre-compiled binary, or by building it yourself)

The IAM user needs programmatic access and should have permissions to create EC2 resources under your account. If you already have access credentials set up on your machine, I suggest adding the access key and secret of the new user as a separate profile in your credentials file (~/.aws/credentials).

It could look something like this:

```ini
[terraform]
aws_access_key_id = ABC
aws_secret_access_key = DEF
```

For later reference, I’ve published a gist of the terraform configuration we are going to create in the next steps.

Why

Before we start setting up our initial configuration, let’s think about why we would want to manage our infrastructure in code in the first place.

A couple of reasons:

- not having to manually configure each VM deployment and thus reducing manual configuration errors (this is even more relevant when you’re dealing with lots of different VMs in multiple networks, many different security groups, etc. – not cool to do by hand)

- making it easy to repeatedly and programmatically set things up with a (hopefully) good baseline configuration

- having a clearly defined and visible configuration and state of your resources

- treating your infrastructure like your other code and benefiting from all the tools you’re already using, e.g. version control

In addition, codifying your infrastructure means you can (re)create and tear down resources very easily: instead of keeping an idle resource around and starting it when you need it, you can build it only when you actually need it, which is exactly what we’re going to do in the next steps.

Having said that, not all is rosy. You still have many chances to mess up configurations and set up a whole park of insecure resources :-)

Main Terraform commands

For the following examples, keep in mind that we’re mostly dealing with these four terraform commands:

```shell
terraform init
terraform plan
terraform apply
terraform destroy
```

Basically, we’re going to init a configuration, plan the creation, apply it one or more times, and explicitly or implicitly destroy resources.

First configuration and initialization

Let’s create a folder terraform-ec2 with a file named main.tf. This is where our configuration goes, written in HCL (HashiCorp Configuration Language).

main.tf:

```hcl
provider "aws" {
  profile = "terraform"
  region  = "eu-central-1"
}
```

We’re defining the provider we want to interact with to manage the resources. For AWS, I’m using the profile “terraform”, which is the profile I defined earlier in ~/.aws/credentials, and the region “eu-central-1”.

Going to the directory and running terraform init will now make Terraform parse the file, check the defined provider and download a plugin for interacting with said provider (you’ll find it in .terraform/plugins).

The output will be something like this:

```
Initializing the backend...

Initializing provider plugins...
- Checking for available provider plugins...
- Downloading plugin for provider "aws" (hashicorp/aws) 2.62.0...

[...]

Terraform has been successfully initialized!

[...]
```

Naturally, you can do this for other providers as well and create resources in multiple locations.
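As a sketch of the “multiple locations” idea, assuming you want a second AWS region: Terraform lets you declare the same provider more than once and tell the configurations apart with alias. The alias us_east, the resource name us_vm and the AMI placeholder are hypothetical; you would need a real AMI ID that exists in us-east-1. Other providers are added the same way, each with its own provider block.

```hcl
# Default AWS provider configuration, as defined above
provider "aws" {
  profile = "terraform"
  region  = "eu-central-1"
}

# Second configuration of the same provider, distinguished by an alias
provider "aws" {
  alias   = "us_east"
  profile = "terraform"
  region  = "us-east-1"
}

# A resource selects the non-default configuration explicitly
resource "aws_instance" "us_vm" {
  provider      = aws.us_east
  ami           = "ami-xxx" # hypothetical placeholder: an AMI ID valid in us-east-1
  instance_type = "t2.micro"
}
```

Resources without a provider argument keep using the default (non-aliased) configuration in eu-central-1.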

Defining resources

Now that we know which provider to target, let’s define an EC2 instance and a security group:

```hcl
resource "aws_instance" "my_vm" {
  ami             = "ami-0b6d8a6db0c665fb7"
  instance_type   = "t2.micro"
  key_name        = "terraform"
  security_groups = [aws_security_group.ssh_http.name]
}

resource "aws_security_group" "ssh_http" {
  name        = "ssh_http"
  description = "Allow SSH and HTTP"

  ingress {
    from_port   = 22
    to_port     = 22
    protocol    = "tcp"
    cidr_blocks = ["x.x.x.x/32"] # make this your IP/IP range
  }

  ingress {
    from_port   = 80
    to_port     = 80
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }
}
```

We’re defining a new EC2 instance in the first resource block and are naming it my_vm in this configuration.

ami refers to an ID of an Amazon Machine Image (AMI). We could make/pre-configure our own but to keep things simple in this tutorial, we’re going to use the ID of a public Ubuntu 18 image in the eu-central-1 region. Note that if you picked a different region in the provider configuration, you need to look for an AMI in your region instead (e.g. in the EC2 console -> Images -> AMI, or via the Ubuntu AMI Locator).

With instance_type we are choosing the vm configuration – in this case t2.micro, which gives us a 1 vCPU, 1 GB memory machine that is more than enough for playing around.

The key_name is another essential attribute. Here, we specify the name of a keypair that we previously created and uploaded to our AWS account (e.g. in the EC2 console -> Key pairs -> Import key pair). We could also create it in this configuration (resource "aws_key_pair"), but to not introduce too many new steps, we’ll be using an already existing one. Make sure to also have the private key readily available on your machine for later steps.
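If you’d rather have Terraform upload the key as well, a minimal sketch could look like the following. It assumes your public key lives at ~/.ssh/terraform.pub and that no key pair named “terraform” exists in the account yet (otherwise AWS rejects the duplicate name); it would replace the aws_instance block from above.

```hcl
# Let Terraform import the public key (assumed path) as a key pair
resource "aws_key_pair" "terraform" {
  key_name   = "terraform"
  public_key = file("~/.ssh/terraform.pub")
}

resource "aws_instance" "my_vm" {
  ami             = "ami-0b6d8a6db0c665fb7"
  instance_type   = "t2.micro"
  key_name        = aws_key_pair.terraform.key_name # also makes the instance depend on the key pair
  security_groups = [aws_security_group.ssh_http.name]
}
```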

There are many more resources and configuration keys; have a look at the provider docs for more details. For now, we are only going to define the security group before finally seeing what we are actually creating with this config.

The security group is referenced in the aws_instance section by its name and defined in its own resource block aws_security_group (note that all aws resources have the aws_ prefix).

If you’re not familiar with security groups on AWS: it’s essentially a virtual firewall with rules for your ingress (inbound) and egress (outbound) network traffic.

So here we are defining allow rules for inbound TCP traffic on ports 22 and 80. Port 80 is open to the world (0.0.0.0/0); for port 22, replace x.x.x.x/32 in cidr_blocks with the IP address (or range) you’re connecting from, so that SSH isn’t open to everyone. In the egress section, the protocol “-1” means “all protocols”; the rest should be self-explanatory.
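A side note on how the instance references the group: security_groups takes group names, which works for the default VPC we assume here. As far as I know, the AWS provider documentation points to vpc_security_group_ids, which takes group IDs, for instances in a VPC. A sketch of the same instance using it:

```hcl
resource "aws_instance" "my_vm" {
  ami           = "ami-0b6d8a6db0c665fb7"
  instance_type = "t2.micro"
  key_name      = "terraform"

  # Security groups referenced by ID instead of by name
  vpc_security_group_ids = [aws_security_group.ssh_http.id]
}
```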

Plan execution

Now we’re finally ready to see what our configuration would do when executed. Let’s run terraform plan. It won’t create any resources yet; instead, it checks that the configuration is syntactically valid (there’s also terraform validate if you only want that check), does a dry run and shows us everything that can already be known from what we defined.

The output (shortened) will look like this:

```
[...]

Terraform will perform the following actions:

  # aws_instance.my_vm will be created
  + resource "aws_instance" "my_vm" {
      + ami                         = "ami-0b6d8a6db0c665fb7"
      + arn                         = (known after apply)
      + associate_public_ip_address = (known after apply)
      + availability_zone           = (known after apply)
      [...]
    }

  # aws_security_group.ssh_http will be created
  + resource "aws_security_group" "ssh_http" {
      + arn         = (known after apply)
      + description = "Allow SSH and HTTP"
      [...]
    }

Plan: 2 to add, 0 to change, 0 to destroy.
```

The + prefix here means adding. Later, when things change, we’ll also see - for removing and ~ for updating in-place. Many keys show “known after apply” because these values are only determined at execution time.

With our dry-run we can see that we would create 2 new resources. Let’s go ahead and run terraform apply to make it reality.

Apply

Since we didn’t store the previous execution plan, a fresh one is created and we’re asked for confirmation.

The output of applying our configuration will look something like this:

```
aws_security_group.ssh_http: Creating...
aws_security_group.ssh_http: Creation complete after 5s [id=sg-xxx]
aws_instance.my_vm: Creating...
aws_instance.my_vm: Still creating... [10s elapsed]
aws_instance.my_vm: Still creating... [20s elapsed]
aws_instance.my_vm: Creation complete after 29s [id=i-xxx]

Apply complete! Resources: 2 added, 0 changed, 0 destroyed.
```

First the security group was created, then the EC2 instance, which depends on it. We should now be able to SSH into the box with ssh -i our-private-key ubuntu@ip-of-new-instance.

Generally, dependencies are resolved automatically (as we have seen here), but they can also be declared explicitly with depends_on when Terraform cannot infer them from the configuration.
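To illustrate the syntax, this is how our instance would declare its dependency on the security group explicitly. It is redundant here, since referencing aws_security_group.ssh_http.name already creates an implicit dependency; depends_on is meant for relationships Terraform cannot see in the configuration.

```hcl
resource "aws_instance" "my_vm" {
  ami             = "ami-0b6d8a6db0c665fb7"
  instance_type   = "t2.micro"
  key_name        = "terraform"
  security_groups = [aws_security_group.ssh_http.name]

  # Explicit ordering: create the security group before this instance.
  # Redundant here because of the reference in security_groups above.
  depends_on = [aws_security_group.ssh_http]
}
```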

Besides creating the resources, Terraform also created a local state file (terraform.tfstate) in our working directory. This file contains information about the state after creation (you’ll see that all previously unknown keys now have values) and is later used to check for changes to the real infrastructure and to know what to change/destroy when config adjustments are being applied. Generally, the state will always be refreshed (synced with the current actual state of your resources) when you plan/apply your next config. As a convenience, you can show the currently stored state with terraform show.
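Keeping the state in a local file is Terraform’s default. A minimal sketch that only makes this default explicit, using a terraform block with the local backend; other backends (e.g. remote storage shared within a team) are configured in the same place. Keep in mind that Terraform will ask you to run terraform init again after you change the backend configuration.

```hcl
terraform {
  # Local state is the default; this block just spells it out
  backend "local" {
    path = "terraform.tfstate" # relative to the working directory
  }
}
```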

Change

For demonstration purposes, let’s change the instance_type to t2.nano:

```hcl
resource "aws_instance" "my_vm" {
  ami           = "ami-0b6d8a6db0c665fb7"
  instance_type = "t2.nano"
  [...]
}
```

And now run terraform apply again:

```
  # aws_instance.my_vm will be updated in-place
  ~ resource "aws_instance" "my_vm" {
        [...]
        instance_state = "running"
      ~ instance_type  = "t2.micro" -> "t2.nano"
        [...]

Apply complete! Resources: 0 added, 1 changed, 0 destroyed.
```

We successfully changed our instance type and can also see that the terraform.tfstate file has changed (compare to terraform.tfstate.backup, which was automatically created).

Generally, Terraform will always tell you if an in-place change is possible or if it will destroy/re-create your resources (important in case you would need to extract data before destruction).
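If a resource holds data you can’t afford to lose, a lifecycle block can act as a safety net. A sketch assuming you want to protect our instance: with prevent_destroy set, Terraform rejects with an error any plan that would destroy it, including a replacement forced by an attribute change and terraform destroy itself.

```hcl
resource "aws_instance" "my_vm" {
  ami             = "ami-0b6d8a6db0c665fb7"
  instance_type   = "t2.micro"
  key_name        = "terraform"
  security_groups = [aws_security_group.ssh_http.name]

  lifecycle {
    # Fail any plan that would destroy this instance
    prevent_destroy = true
  }
}
```

You’d have to remove the setting again before destroying the instance on purpose. It also doesn’t help if the whole resource block is deleted, since the setting goes with it.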

Destroy

Knowing what we created, we can now just as easily destroy all our resources by executing terraform destroy (again, after a confirmation of the execution plan).

```
aws_security_group.ssh_http: Refreshing state... [id=sg-xxx]
aws_instance.my_vm: Refreshing state... [id=i-xxx]

[...]

Destroy complete! Resources: 2 destroyed.
```

Further provisioning

While we could work with our own pre-configured images (instead of using a public AMI like we did above), it is also possible to define initialization steps that run after a resource has been created, both locally (on the machine running the terraform command) and remotely (on the created system).
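For the local side, a minimal sketch of a local-exec provisioner that would go inside the aws_instance block. It simply appends the new instance’s public IP to a file on your own machine; the file name instance_ips.txt is hypothetical.

```hcl
# Goes inside the aws_instance "my_vm" block
provisioner "local-exec" {
  # Runs on the machine executing terraform, after the instance has been created
  command = "echo ${self.public_ip} >> instance_ips.txt"
}
```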

Let’s keep things simple and just define a couple of remote commands that will be executed via SSH after creation of our VM. Note that this will only be possible if the machine is already configured to receive connections. If the remote service (such as SSH or WinRM) needs to be configured/started first, you might want to first have a look at cloud initialization configs, such as writing your script in a user_data section for EC2 instances. For the Ubuntu image we chose, everything is already set up for us.
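For comparison, a sketch of the user_data route, assuming the same Ubuntu AMI with cloud-init: the script is handed to EC2 and run by cloud-init as root on first boot (hence no sudo), so Terraform itself never connects to the machine. Note that terraform apply may return before the script has finished.

```hcl
resource "aws_instance" "my_vm" {
  ami             = "ami-0b6d8a6db0c665fb7"
  instance_type   = "t2.micro"
  key_name        = "terraform"
  security_groups = [aws_security_group.ssh_http.name]

  # Executed by cloud-init on first boot, not by Terraform
  user_data = <<-EOF
    #!/bin/bash
    apt-get update
    apt-get -y install apache2
  EOF
}
```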

Since we earlier configured our security group to allow inbound traffic on port 80, let’s install an Apache web server on our instance, using nothing but commands executed by the remote-exec provisioner for demonstration purposes.

Add the following block into the aws_instance resource block:

```hcl
provisioner "remote-exec" {
  inline = [
    "sleep 10",
    "sudo apt-get update",
    "sudo apt-get -y install apache2"
  ]

  connection {
    type        = "ssh"
    user        = "ubuntu"
    private_key = file("~/.ssh/terraform")
    host        = self.public_ip
  }
}
```

The inline steps will be invoked after resource creation and be executed over SSH using the specified key and the IP of the newly created instance. I’ve added a short delay as a quick hack to let the cloud init finish and prevent dpkg locks.
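A possibly more robust alternative to the fixed delay is to wait for cloud-init explicitly. This sketch assumes the image’s cloud-init version supports cloud-init status --wait, which blocks until cloud-init has finished; otherwise it is identical to the provisioner above.

```hcl
# Wait for cloud-init (assumes `cloud-init status --wait` is available) instead of sleeping
provisioner "remote-exec" {
  inline = [
    "cloud-init status --wait",
    "sudo apt-get update",
    "sudo apt-get -y install apache2",
  ]

  connection {
    type        = "ssh"
    user        = "ubuntu"
    private_key = file("~/.ssh/terraform")
    host        = self.public_ip
  }
}
```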

For further convenience, we’ll also add an output block at the very end of our main.tf that shows us the public IP (hint: you can also access output values later on with terraform output, since they are stored as part of the state file).

```hcl
output "instance_ip" {
  value = aws_instance.my_vm.public_ip
}
```

Let’s terraform apply and watch the install fly by. At the end, we get the public IP:

```
Outputs:

instance_ip = x.x.x.x
```

If everything worked well, navigating to that IP with a browser will show us the Apache default page.

Making it a bit nicer

Let’s destroy our resources again (terraform destroy) and then do one last thing before wrapping up: making the config a bit nicer by using variables, instead of sprinkling hardcoded values everywhere.

To provide values for our variables, we’re creating a new file terraform.tfvars (Terraform automatically loads a file with exactly this name):

```hcl
ssh_source_ips = ["x.x.x.x/28", "x.x.x.x/32"]
ami_owner_id   = "099720109477"
key            = ["terraform", "~/.ssh/terraform"]
```

Here, we define two source CIDR blocks with a couple of addresses that we allow for SSH, an owner ID of the AMI we want and our keypair values from before (name and local path).

The AMI owner ID is that of Canonical (also see Ubuntu’s AMI locator), so that we can dynamically select the latest Ubuntu 18.04 image (see the following changes) and make our configuration work for regions other than eu-central-1, since the AMI ID is no longer hardcoded.

This is how our final main.tf will look:

```hcl
variable "region" { type = string }
variable "ssh_source_ips" { type = list(string) }        # list of CIDR blocks
variable "ami_owner_id" { type = string }
variable "key" { type = tuple([string, string]) }        # key_name and path to local private key

provider "aws" {
  profile = "terraform"
  region  = var.region
}

data "aws_ami" "ubuntu_18" {
  most_recent = true
  owners      = [var.ami_owner_id]

  filter {
    name   = "name"
    values = ["ubuntu/images/hvm-ssd/ubuntu-bionic-18.04-amd64-server-*"]
  }
}

resource "aws_instance" "my_vm" {
  ami             = data.aws_ami.ubuntu_18.id
  instance_type   = "t2.micro"
  key_name        = var.key[0]
  security_groups = [aws_security_group.ssh_http.name]

  provisioner "remote-exec" {
    inline = [
      "sleep 10",
      "sudo apt-get update",
      "sudo apt-get -y install apache2",
    ]

    connection {
      type        = "ssh"
      user        = "ubuntu"
      private_key = file(var.key[1])
      host        = self.public_ip
    }
  }
}

resource "aws_security_group" "ssh_http" {
  name        = "ssh_http"
  description = "Allow SSH and HTTP"

  ingress {
    from_port   = 22
    to_port     = 22
    protocol    = "tcp"
    cidr_blocks = var.ssh_source_ips
  }

  ingress {
    from_port   = 80
    to_port     = 80
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }
}

output "instance_ip" {
  value = aws_instance.my_vm.public_ip
}

output "chosen_ami" {
  value = data.aws_ami.ubuntu_18.id
}
```

All variables are declared at the top. Besides ssh_source_ips, key and ami_owner_id, we have region, for which we haven’t provided a value in the terraform.tfvars file. When we execute our config, we’ll be asked for a value (alternatively, we could pass it with the -var flag of the apply command).
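Alternatively, a variable can carry a default in its declaration, in which case Terraform won’t prompt for it. The sketch below does that for region (eu-central-1 as the default is just an example) and, as a hypothetical variation, replaces the positional key tuple with an object so that the two values have names:

```hcl
variable "region" {
  type        = string
  description = "AWS region to deploy into"
  default     = "eu-central-1" # used unless overridden, e.g. via -var or terraform.tfvars
}

# Hypothetical alternative to tuple([string, string])
variable "key" {
  type = object({
    name = string # key pair name in AWS
    path = string # path to the local private key
  })
}
```

With this declaration, terraform.tfvars would contain key = { name = "terraform", path = "~/.ssh/terraform" }, and the references change from var.key[0] and var.key[1] to var.key.name and var.key.path.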

The image selection is now done in a data source block; we’re basically filtering for the most recent bionic image from owner Canonical and then using its ID in the instance block.

At the very end we additionally output the chosen AMI.
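If the ID alone isn’t very telling, the data source also exposes other attributes of the selected image, such as its name. A small sketch of an additional output (the output name chosen_ami_name is hypothetical):

```hcl
output "chosen_ami_name" {
  # Human-readable name of the image selected by the data source
  value = data.aws_ami.ubuntu_18.name
}
```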

Let’s now run the new config by executing terraform plan. Enter a desired region and check the ami-id in the execution plan. It’ll differ when you run another plan and choose a different region.

Finally, apply the config and check that everything works. At the time of writing, for eu-central-1 the output should look like this:

```
Outputs:

chosen_ami = ami-0b6d8a6db0c665fb7
instance_ip = x.x.x.x
```

Explore further

Obviously, you can do much, much more and also do things better than outlined here. I hope my notes gave you enough information to start exploring Terraform, or “infrastructure as code” in general, further. Especially once you start dealing with more resources, you’ll appreciate this more transparent and more actionable way of managing resources. Like with any automation, it just feels good once everything is set up and works by just pushing a few keys :-)

Like to comment? Feel free to send me an email or reach out on Twitter.
